Note: native autograd support is an experimental feature in tidy3d 2.7. To see the original implementation of this notebook using jax and the adjoint plugin, refer to this notebook.

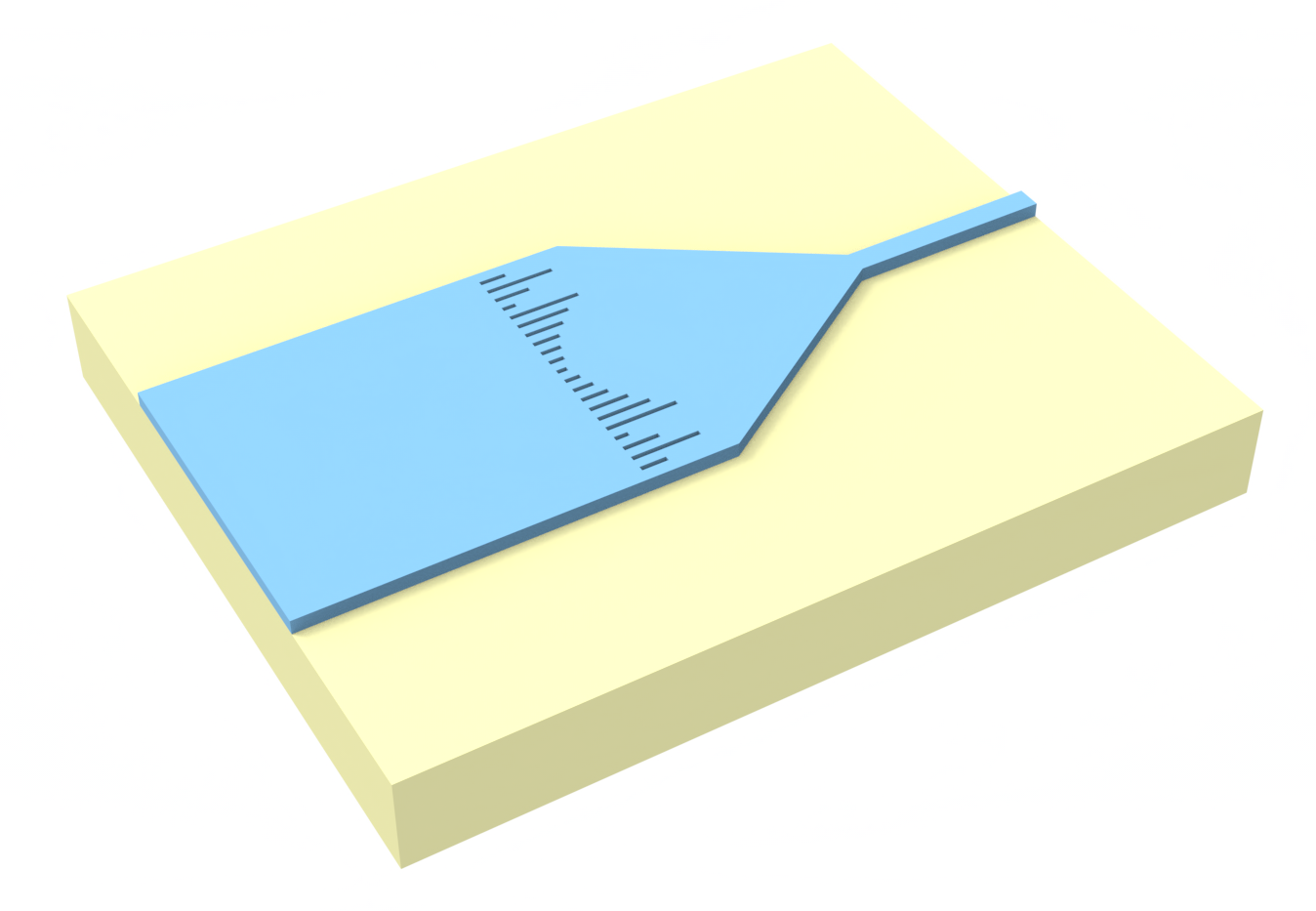

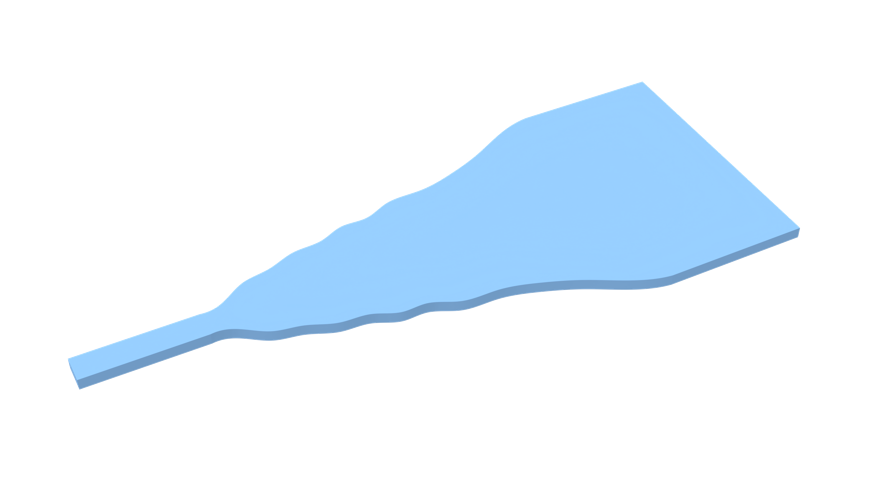

In this notebook, we will show how to use tidy3d to optimize a taper with respect to the boundaries of a structure defined using a PolySlab.

We will apply this capability to design a non-adiabatic waveguide taper between a narrow and wide waveguide, based loosely on Michaels, Andrew, and Eli Yablonovitch. "Leveraging continuous material averaging for inverse electromagnetic design." Optics express 26.24 (2018): 31717-31737.

We start by importing our typical python packages, plus tidy3d and autograd.

import autograd as ag

import autograd.numpy as anp

import matplotlib.pylab as plt

import numpy as np

import tidy3d as td

import tidy3d.web as web

Set up¶

Next we will define some basic parameters of the waveguide, such as the input and output waveguide dimensions, taper width, and taper length.

wavelength = 1.0

freq0 = td.C_0 / wavelength

wg_width_in = 0.5 * wavelength

wg_width_out = 5.0 * wavelength

wg_medium = td.material_library["Si3N4"]["Philipp1973Sellmeier"]

wg_length = 1 * wavelength

taper_length = 10.0

spc_pml = 1.5 * wavelength

Lx = wg_length + taper_length + wg_length

Ly = spc_pml + max(wg_width_in, wg_width_out) + spc_pml

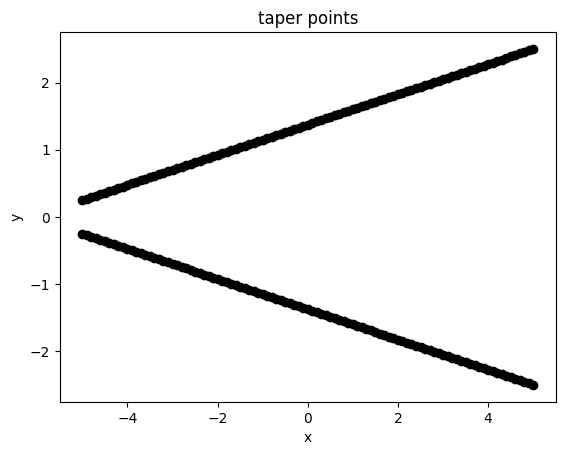

Our taper is defined as a set of num_points connected vertices in a polygon. We define the fixed x positions of each vertex and then construct the y positions for the starting device (linear taper).

num_points = 101

x_start = -taper_length / 2

x_end = +taper_length / 2

xs = np.linspace(x_start, x_end, num_points)

ys0 = (wg_width_in + (wg_width_out - wg_width_in) * (xs - x_start) / (x_end - x_start)) / 2.0

Let's plot these points to make sure they seem reasonable.

plt.plot(xs, +ys0, "ko-")

plt.plot(xs, -ys0, "ko-")

plt.xlabel("x")

plt.ylabel("y")

plt.title("taper points")

plt.show()

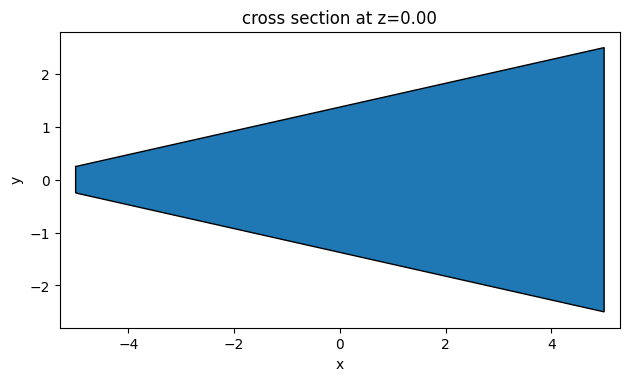

Let's wrap this in a function that constructs these points and then creates a td.PolySlab for use in the td.Simulation.

def make_taper(ys) -> td.PolySlab:

"""Create a PolySlab for the taper based on the supplied y values."""

vertices = anp.concatenate(

[

anp.column_stack((xs, ys)),

anp.column_stack((xs[::-1], -ys[::-1])),

]

)

return td.PolySlab(vertices=vertices, slab_bounds=(-td.inf, +td.inf), axis=2)

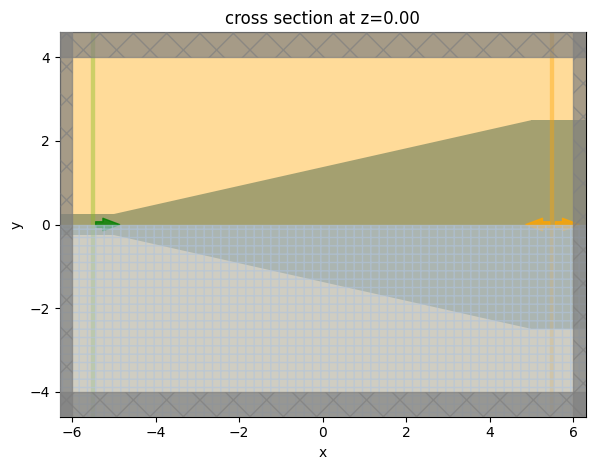

Now we'll call this function and plot the geometry for a sanity check.

# sanity check to see the polyslab

taper_geo = make_taper(ys0)

ax = taper_geo.plot(z=0)

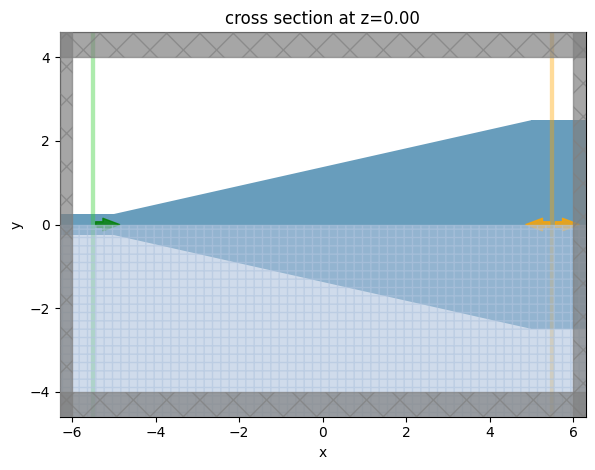

Next, let's write a function that generates a td.Simulation given a set of y coordinates for the taper, including the monitors, sources, and waveguide geometries.

def make_sim(ys, include_field_mnt: bool = False) -> td.Simulation:

"""Make a td.Simulation containing the taper."""

wg_in_box = td.Box.from_bounds(

rmin=(-Lx, -wg_width_in / 2, -td.inf),

rmax=(-Lx / 2 + wg_length + 0.01, +wg_width_in / 2, +td.inf),

)

wg_out_box = td.Box.from_bounds(

rmin=(+Lx / 2 - wg_length - 0.01, -wg_width_out / 2, -td.inf),

rmax=(+Lx, +wg_width_out / 2, +td.inf),

)

taper_geo = make_taper(ys)

wg_in = td.Structure(geometry=wg_in_box, medium=wg_medium)

wg_out = td.Structure(geometry=wg_out_box, medium=wg_medium)

taper = td.Structure(geometry=taper_geo, medium=wg_medium)

mode_source = td.ModeSource(

center=(-Lx / 2 + wg_length / 2, 0, 0),

size=(0, td.inf, td.inf),

source_time=td.GaussianPulse(freq0=freq0, fwidth=freq0 / 10),

direction="+",

)

mode_monitor = td.ModeMonitor(

center=(+Lx / 2 - wg_length / 2, 0, 0),

size=(0, td.inf, td.inf),

freqs=[freq0],

mode_spec=td.ModeSpec(),

name="mode",

)

field_monitor = td.FieldMonitor(

center=(0, 0, 0),

size=(td.inf, td.inf, 0),

freqs=[freq0],

name="field",

)

return td.Simulation(

size=(Lx, Ly, 0),

structures=[taper, wg_in, wg_out],

monitors=[mode_monitor, field_monitor] if include_field_mnt else [mode_monitor],

sources=[mode_source],

run_time=100 / freq0,

grid_spec=td.GridSpec.auto(min_steps_per_wvl=20),

boundary_spec=td.BoundarySpec.pml(x=True, y=True, z=False),

symmetry=(0, +1, 0),

)

sim = make_sim(ys0)

ax = sim.plot(z=0)

Defining Objective¶

Now that we have a function to create our td.Simulation, we need to define our objective function.

We will try to optimize the power transmission into the fundamental mode on the output waveguide, so we write a function to postprocess a td.SimulationData to give this result.

def measure_transmission(sim_data: td.SimulationData) -> float:

"""Measure the first order transmission."""

amp_data = sim_data["mode"].amps

amp = amp_data.sel(f=freq0, direction="+", mode_index=0).values

return anp.sum(abs(amp) ** 2)

Next, we will define a few convenience functions to generate our taper y values passed on our objective function parameters.

We define a set of parameters that can range from -infinity to +infinity, but project onto the range [wg_width_out and wg_width_in] through a tanh() function.

We do this to constrain the taper values within this range in a smooth and differentiable way.

We also write an inverse function to get the parameters given a set of desired y values and assert that this function works properly.

def get_ys(parameters: np.ndarray) -> np.ndarray:

"""Convert arbitrary parameters to y values for the vertices (parameter (-inf, inf) -> wg width of (wg_width_out, wg_width_in)."""

params_between_0_1 = (anp.tanh(np.pi * parameters) + 1.0) / 2.0

params_scaled = params_between_0_1 * (wg_width_out - wg_width_in) / 2.0

ys = params_scaled + wg_width_in / 2

return ys

def get_params(ys: np.ndarray) -> np.ndarray:

"""inverse of above, get parameters from ys"""

params_scaled = ys - wg_width_in / 2

params_between_0_1 = 2 * params_scaled / (wg_width_out - wg_width_in)

tanh_pi_params = 2 * params_between_0_1 - 1

params = np.arctanh(tanh_pi_params) / np.pi

return params

# assert that the inverse function works properly

params_test = 2 * (np.random.random((10,)) - 0.5)

ys_test = get_ys(params_test)

assert np.allclose(get_params(ys_test), params_test)

We then make a function that simply wraps our previously defined functions to generate a Simulation given some parameters.

def make_sim_params(parameters: np.ndarray, include_field_mnt: bool = False) -> td.Simulation:

"""Make the simulation out of raw parameters."""

ys = get_ys(parameters)

return make_sim(ys, include_field_mnt=include_field_mnt)

Smoothness Penalty¶

It is important to ensure that the final device does not contain feature sizes below a minimum radius of curvature, otherwise there could be considerable difficulty in fabricating the device reliably.

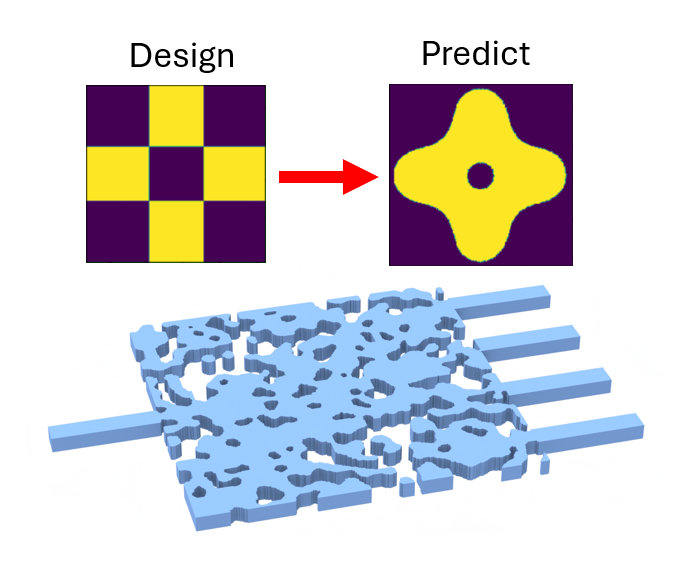

For this, the autograd plugin introduces a penalty function that approximates the radius of curvature about each vertex and introduces a significant penalty to the objective function if that value is below a minimum radius of curvature.

The local radii are determined by fitting a quadratic Bezier curve to each set of 3 adjacent points in the taper and analytically computing the radius of curvature from that curve fit. The details of this calculation are described in the paper linked at the introduction of this notebook.

from tidy3d.plugins.autograd import make_curvature_penalty

curvature_penalty = make_curvature_penalty(min_radius=0.15)

def penalty(params: np.ndarray) -> float:

"""Compute penalty for a set of parameters."""

ys = get_ys(params)

points = anp.array([xs, ys]).T

return curvature_penalty(points)

Initial Starting Design¶

As our initial design, we take a linear taper between the two waveguides.

# desired ys

ys0 = np.linspace(wg_width_in / 2 + 0.001, wg_width_out / 2 - 0.001, num_points)

# corresponding parameters

params0 = get_params(ys0)

# make the simulation corresponding to these parameters

sim = make_sim_params(params0, include_field_mnt=True)

ax = sim.plot(z=0)

Lets get the penalty value corresponding to this design, which is 0 because the edges are straight (infinite radius of curvature).

penalty_value = penalty(params0)

print(f"starting penalty = {float(penalty_value):.2e}")

starting penalty = 0.00e+00

Finally, let's run this simulation to get a feeling for the initial device performance.

sim_data = web.run(sim, task_name="taper fields")

10:44:15 EST Created task 'taper fields' with task_id 'fdve-e1fca213-0f3a-4bb6-b99a-0fdf73dec837' and task_type 'FDTD'.

View task using web UI at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-e1fca213-0f3 a-4bb6-b99a-0fdf73dec837'.

Output()

10:44:16 EST status = queued

To cancel the simulation, use 'web.abort(task_id)' or 'web.delete(task_id)' or abort/delete the task in the web UI. Terminating the Python script will not stop the job running on the cloud.

Output()

10:44:20 EST status = preprocess

10:44:23 EST Maximum FlexCredit cost: 0.025. Use 'web.real_cost(task_id)' to get the billed FlexCredit cost after a simulation run.

starting up solver

running solver

Output()

10:44:27 EST early shutoff detected at 40%, exiting.

status = postprocess

Output()

10:44:29 EST status = success

View simulation result at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-e1fca213-0f3 a-4bb6-b99a-0fdf73dec837'.

Output()

10:44:32 EST loading simulation from simulation_data.hdf5

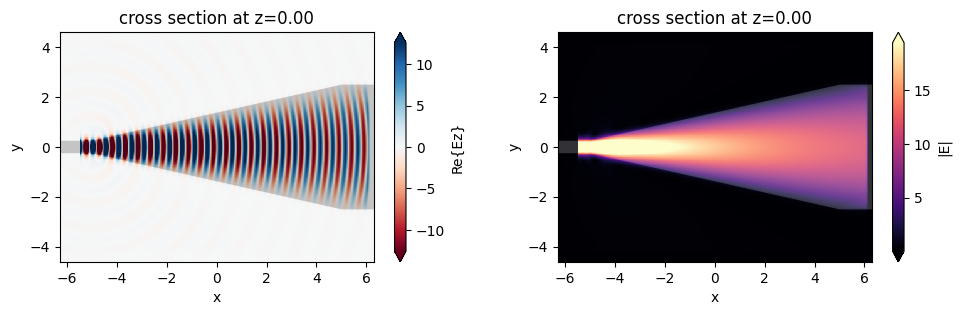

f, (ax1, ax2) = plt.subplots(1, 2, tight_layout=True, figsize=(10, 3))

sim_data.plot_field(field_monitor_name="field", field_name="Ez", val="real", ax=ax1)

sim_data.plot_field(field_monitor_name="field", field_name="E", val="abs", ax=ax2)

plt.show()

Gradient-based Optimization¶

Now that we have our design and post processing functions set up, we are finally ready to put everything together to start optimizing our device with inverse design.

We first set up an objective function that takes the parameters, sets up and runs the simulation, and returns the transmission minus the penalty of the parameters.

def objective(parameters: np.ndarray, verbose: bool = False) -> float:

"""Construct simulation, run, and measure transmission."""

sim = make_sim_params(parameters, include_field_mnt=False)

sim_data = web.run(sim, task_name="autograd taper", verbose=verbose)

return measure_transmission(sim_data) - penalty(parameters)

To test our our objective, we will use autograd to make and run a function that returns the objective value and its gradient.

grad_fn = ag.value_and_grad(objective)

val, grad = grad_fn(params0, verbose=True)

10:44:33 EST Created task 'autograd taper' with task_id 'fdve-db89418d-9727-43a3-b1cb-12c8f9fa03d0' and task_type 'FDTD'.

View task using web UI at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-db89418d-972 7-43a3-b1cb-12c8f9fa03d0'.

Output()

10:44:34 EST status = queued

To cancel the simulation, use 'web.abort(task_id)' or 'web.delete(task_id)' or abort/delete the task in the web UI. Terminating the Python script will not stop the job running on the cloud.

Output()

10:44:39 EST status = preprocess

10:44:41 EST Maximum FlexCredit cost: 0.025. Use 'web.real_cost(task_id)' to get the billed FlexCredit cost after a simulation run.

starting up solver

running solver

Output()

10:44:45 EST early shutoff detected at 40%, exiting.

status = postprocess

Output()

10:44:47 EST status = success

10:44:48 EST View simulation result at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-db89418d-972 7-43a3-b1cb-12c8f9fa03d0'.

Output()

10:44:50 EST loading simulation from simulation_data.hdf5

Created task 'autograd taper_adjoint' with task_id 'fdve-3b2828d7-348d-49cc-8bbe-8aea9e6b578e' and task_type 'FDTD'.

View task using web UI at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-3b2828d7-348 d-49cc-8bbe-8aea9e6b578e'.

Output()

10:44:52 EST status = queued

To cancel the simulation, use 'web.abort(task_id)' or 'web.delete(task_id)' or abort/delete the task in the web UI. Terminating the Python script will not stop the job running on the cloud.

Output()

10:44:58 EST status = preprocess

10:45:00 EST Maximum FlexCredit cost: 0.025. Use 'web.real_cost(task_id)' to get the billed FlexCredit cost after a simulation run.

starting up solver

running solver

Output()

10:45:03 EST early shutoff detected at 60%, exiting.

status = postprocess

Output()

10:45:05 EST status = success

10:45:06 EST View simulation result at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-3b2828d7-348 d-49cc-8bbe-8aea9e6b578e'.

Output()

10:45:08 EST loading simulation from simulation_data_adjoint.hdf5

print(f"objective = {val:.2e}")

print(f"gradient = {np.nan_to_num(grad)}")

objective = 7.22e-01 gradient = [ 2.76000293e-03 1.02699094e-01 5.50668603e-02 -1.24014028e-01 -2.58949492e-01 -3.46533764e-01 -3.91238111e-01 -3.20571189e-01 -2.16951850e-01 -8.90745191e-02 1.02571286e-01 1.54245170e-01 4.89177040e-02 1.23377127e-02 9.00304483e-02 2.27608112e-01 3.31118337e-01 3.83140486e-01 4.29783271e-01 3.88477615e-01 3.04638887e-01 2.33256556e-01 1.27787339e-01 9.75866504e-02 1.07413052e-01 5.27193031e-02 1.00461924e-02 -2.53550265e-02 -5.20957776e-02 -4.59234276e-02 -3.31893723e-02 4.83642808e-03 3.23931361e-02 3.68979721e-02 4.22826410e-02 3.29331056e-02 3.96374947e-02 4.77820695e-02 2.70129711e-02 1.08400227e-02 -5.72545414e-03 -3.03500277e-02 -4.06373498e-02 -4.00499540e-02 -4.21587014e-02 -4.27064656e-02 -4.01792577e-02 -3.59955937e-02 -2.68672685e-02 -2.34838193e-02 -2.46270886e-02 -2.81082065e-02 -3.65238330e-02 -3.91798271e-02 -4.46301852e-02 -4.81170808e-02 -4.66103472e-02 -5.04064234e-02 -4.46779052e-02 -4.09772206e-02 -4.29115837e-02 -3.97128660e-02 -4.34250277e-02 -4.33987278e-02 -4.26534901e-02 -4.53903578e-02 -4.11334164e-02 -4.06943062e-02 -3.85535730e-02 -3.47947436e-02 -3.62180171e-02 -3.44964366e-02 -3.34523955e-02 -3.21109293e-02 -2.94014015e-02 -2.76413081e-02 -2.48044074e-02 -2.37877655e-02 -2.25504584e-02 -2.07847659e-02 -1.92861057e-02 -1.71183771e-02 -1.55353807e-02 -1.38659044e-02 -1.27596618e-02 -1.11512018e-02 -9.63881993e-03 -8.70273770e-03 -7.05635161e-03 -6.43114772e-03 -5.39941016e-03 -4.23111343e-03 -3.58761302e-03 -2.52402462e-03 -2.12286108e-03 -1.41506841e-03 -8.26550782e-04 -6.18685454e-04 -2.03187188e-04 -9.11642867e-05 -3.18955136e-06]

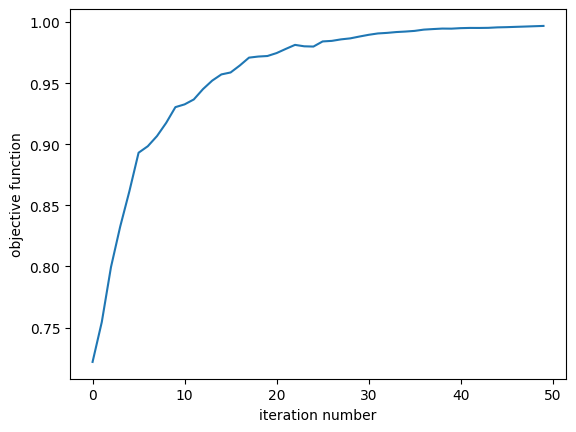

Now we can run optax's Adam optimization loop with this value_and_grad() function. See tutorial 3 for more details on the implementation.

import optax

# turn off warnings to reduce verbosity

# td.config.logging_level = "ERROR"

# hyperparameters

num_steps = 50

learning_rate = 0.0075

# initialize adam optimizer with starting parameters

params = np.array(params0).copy()

optimizer = optax.adam(learning_rate=learning_rate)

opt_state = optimizer.init(params)

# store history

objective_history = []

param_history = [params]

for i in range(num_steps):

# compute gradient and current objective function value

value, gradient = grad_fn(params, verbose=False)

# convert nan to 0 (infinite radius of curvature) and multiply all by -1 to maximize obj_fn.

# outputs

print(f"step = {i + 1}")

print(f"\tJ = {value:.4e}")

print(f"\tgrad_norm = {np.linalg.norm(gradient):.4e}")

# compute and apply updates to the optimizer based on gradient

updates, opt_state = optimizer.update(-gradient, opt_state, params)

params[:] = optax.apply_updates(params, updates)

# save history

objective_history.append(value)

param_history.append(params)

step = 1 J = 7.2186e-01 grad_norm = 1.2149e+00 step = 2 J = 7.5444e-01 grad_norm = 2.6780e+00 step = 3 J = 7.9946e-01 grad_norm = 3.8562e+00 step = 4 J = 8.3257e-01 grad_norm = 3.1312e+00 step = 5 J = 8.6154e-01 grad_norm = 3.3225e+00 step = 6 J = 8.9307e-01 grad_norm = 1.8977e+00 step = 7 J = 8.9838e-01 grad_norm = 4.0921e+00 step = 8 J = 9.0670e-01 grad_norm = 4.2156e+00 step = 9 J = 9.1752e-01 grad_norm = 3.5482e+00 step = 10 J = 9.3038e-01 grad_norm = 3.1259e+00 step = 11 J = 9.3264e-01 grad_norm = 4.1750e+00 step = 12 J = 9.3667e-01 grad_norm = 3.9546e+00 step = 13 J = 9.4514e-01 grad_norm = 3.9537e+00 step = 14 J = 9.5209e-01 grad_norm = 4.1436e+00 step = 15 J = 9.5717e-01 grad_norm = 3.7688e+00 step = 16 J = 9.5876e-01 grad_norm = 3.6146e+00 step = 17 J = 9.6447e-01 grad_norm = 3.2351e+00 step = 18 J = 9.7089e-01 grad_norm = 3.0518e+00 step = 19 J = 9.7178e-01 grad_norm = 2.6888e+00 step = 20 J = 9.7222e-01 grad_norm = 3.2244e+00 step = 21 J = 9.7463e-01 grad_norm = 2.9934e+00 step = 22 J = 9.7805e-01 grad_norm = 2.3418e+00 step = 23 J = 9.8133e-01 grad_norm = 1.6570e+00 step = 24 J = 9.8017e-01 grad_norm = 2.3101e+00 step = 25 J = 9.7996e-01 grad_norm = 2.3219e+00 step = 26 J = 9.8415e-01 grad_norm = 1.2747e+00 step = 27 J = 9.8461e-01 grad_norm = 1.6170e+00 step = 28 J = 9.8584e-01 grad_norm = 1.5162e+00 step = 29 J = 9.8666e-01 grad_norm = 1.7337e+00 step = 30 J = 9.8816e-01 grad_norm = 1.4281e+00 step = 31 J = 9.8957e-01 grad_norm = 9.5692e-01 step = 32 J = 9.9069e-01 grad_norm = 1.0903e+00 step = 33 J = 9.9115e-01 grad_norm = 1.1717e+00 step = 34 J = 9.9183e-01 grad_norm = 9.4896e-01 step = 35 J = 9.9224e-01 grad_norm = 1.0512e+00 step = 36 J = 9.9278e-01 grad_norm = 9.8754e-01 step = 37 J = 9.9382e-01 grad_norm = 5.9541e-01 step = 38 J = 9.9427e-01 grad_norm = 7.0872e-01 step = 39 J = 9.9468e-01 grad_norm = 6.0102e-01 step = 40 J = 9.9461e-01 grad_norm = 8.0735e-01 step = 41 J = 9.9503e-01 grad_norm = 5.3409e-01 step = 42 J = 9.9521e-01 grad_norm = 4.1366e-01 step = 43 J = 9.9518e-01 grad_norm = 4.9884e-01 step = 44 J = 9.9528e-01 grad_norm = 5.4485e-01 step = 45 J = 9.9566e-01 grad_norm = 3.8565e-01 step = 46 J = 9.9584e-01 grad_norm = 4.1186e-01 step = 47 J = 9.9609e-01 grad_norm = 3.6427e-01 step = 48 J = 9.9633e-01 grad_norm = 3.3885e-01 step = 49 J = 9.9660e-01 grad_norm = 2.8181e-01 step = 50 J = 9.9685e-01 grad_norm = 2.0779e-01

params_best = param_history[-1]

We see that our objective has increased steadily from a transmission of 56% to about 95%!

ax = plt.plot(objective_history)

plt.xlabel("iteration number")

plt.ylabel("objective function")

plt.show()

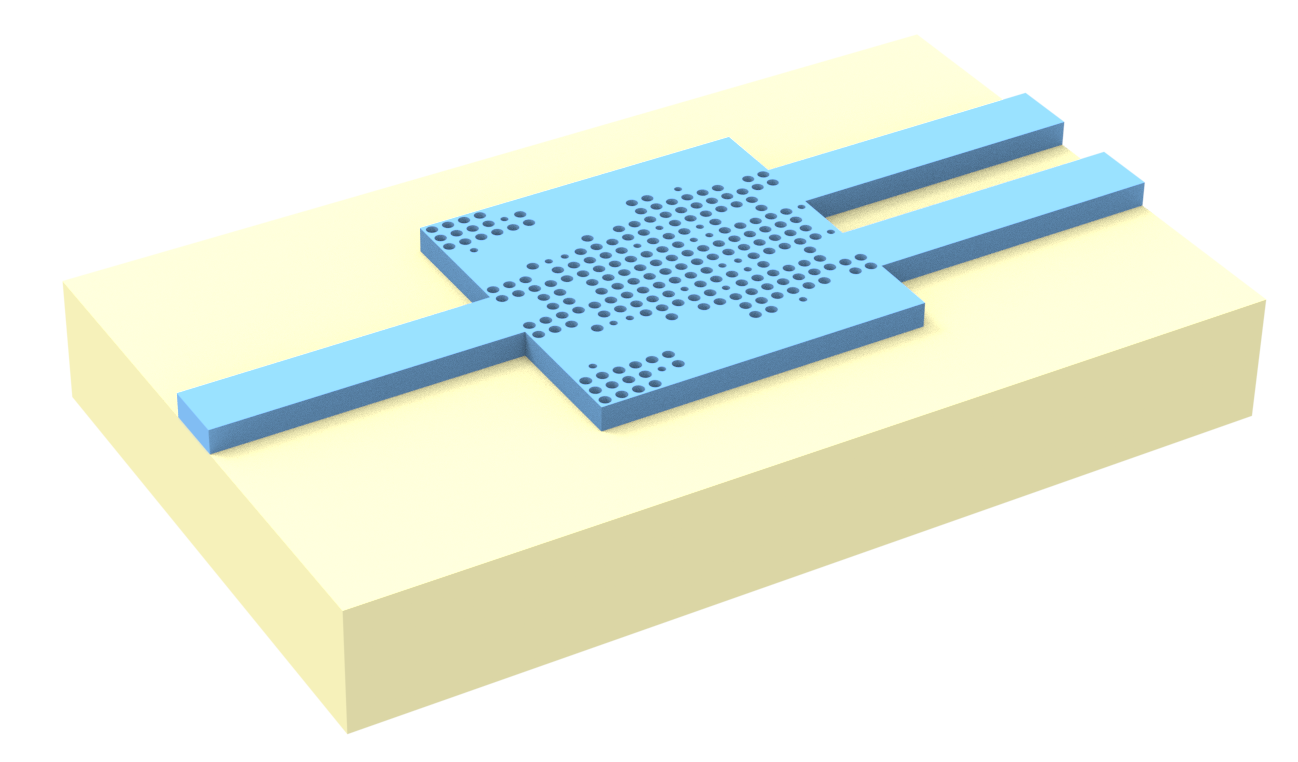

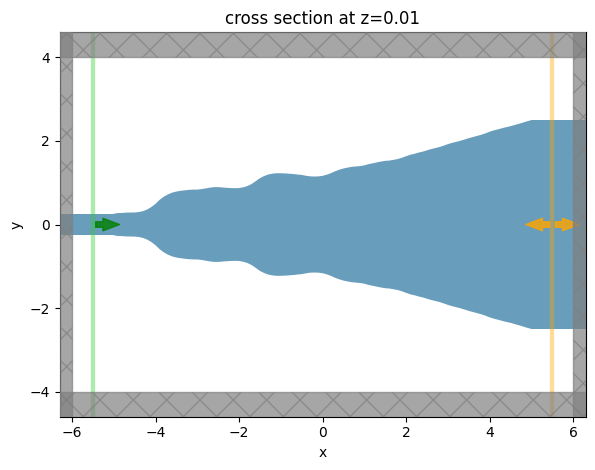

Our final device displays smooth features and no sharp corners. Without our penalty this would have not been the case!

sim_best = make_sim_params(param_history[-1], include_field_mnt=True)

ax = sim_best.plot(z=0.01)

sim_data_best = td.web.run(sim_best, task_name="taper final")

11:14:03 EST Created task 'taper final' with task_id 'fdve-7e386360-0696-4060-b708-b7b28c48cd38' and task_type 'FDTD'.

View task using web UI at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-7e386360-069 6-4060-b708-b7b28c48cd38'.

Output()

11:14:05 EST status = queued

To cancel the simulation, use 'web.abort(task_id)' or 'web.delete(task_id)' or abort/delete the task in the web UI. Terminating the Python script will not stop the job running on the cloud.

Output()

11:14:13 EST status = preprocess

11:14:15 EST Maximum FlexCredit cost: 0.025. Use 'web.real_cost(task_id)' to get the billed FlexCredit cost after a simulation run.

starting up solver

running solver

Output()

11:14:20 EST early shutoff detected at 44%, exiting.

status = postprocess

Output()

11:14:21 EST status = success

View simulation result at 'https://tidy3d.simulation.cloud/workbench?taskId=fdve-7e386360-069 6-4060-b708-b7b28c48cd38'.

Output()

11:14:25 EST loading simulation from simulation_data.hdf5

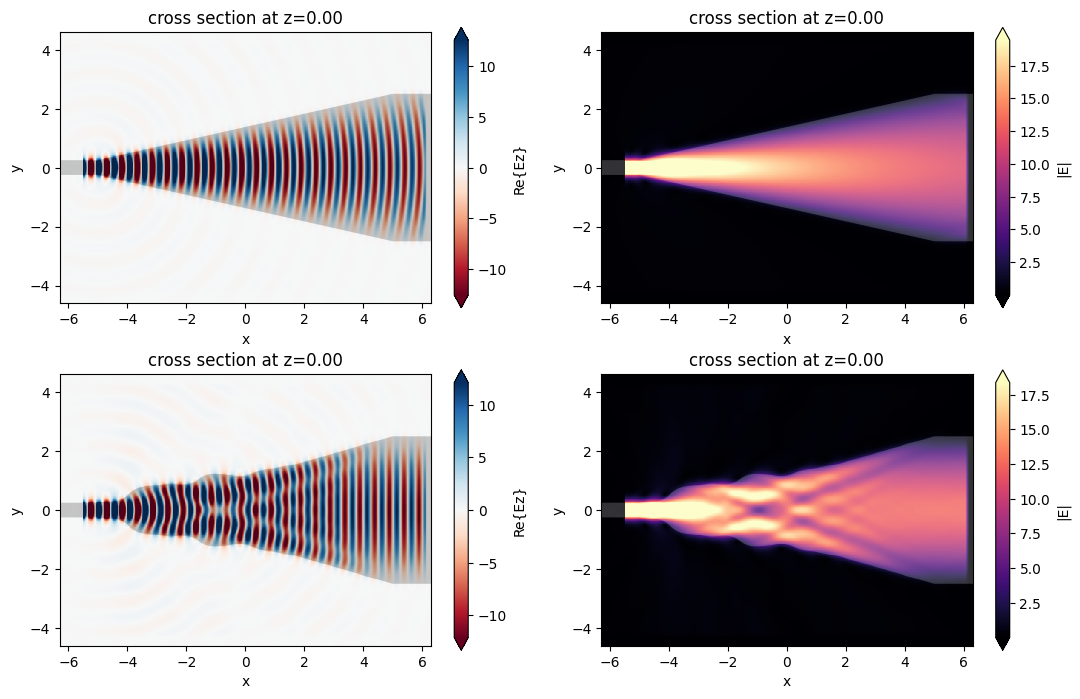

Comparing the field patterns, we see that the optimized device gives a much more uniform field profile at the output waveguide, as desired. One can further check that this device and field pattern matches the referenced paper quite nicely!

f, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2, tight_layout=True, figsize=(11, 7))

# plot original

sim_data.plot_field(field_monitor_name="field", field_name="Ez", val="real", ax=ax1)

sim_data.plot_field(field_monitor_name="field", field_name="E", val="abs", ax=ax2)

# plot optimized

sim_data_best.plot_field(field_monitor_name="field", field_name="Ez", val="real", ax=ax3)

sim_data_best.plot_field(field_monitor_name="field", field_name="E", val="abs", ax=ax4)

plt.show()

transmission_start = float(measure_transmission(sim_data))

transmission_end = float(measure_transmission(sim_data_best))

print(

f"Transmission improved from {(transmission_start * 100):.2f}% to {(transmission_end * 100):.2f}%"

)

Transmission improved from 72.19% to 99.74%

loss_db = -10 * np.log10(0.9974)

print(loss_db)

0.011306361176558092