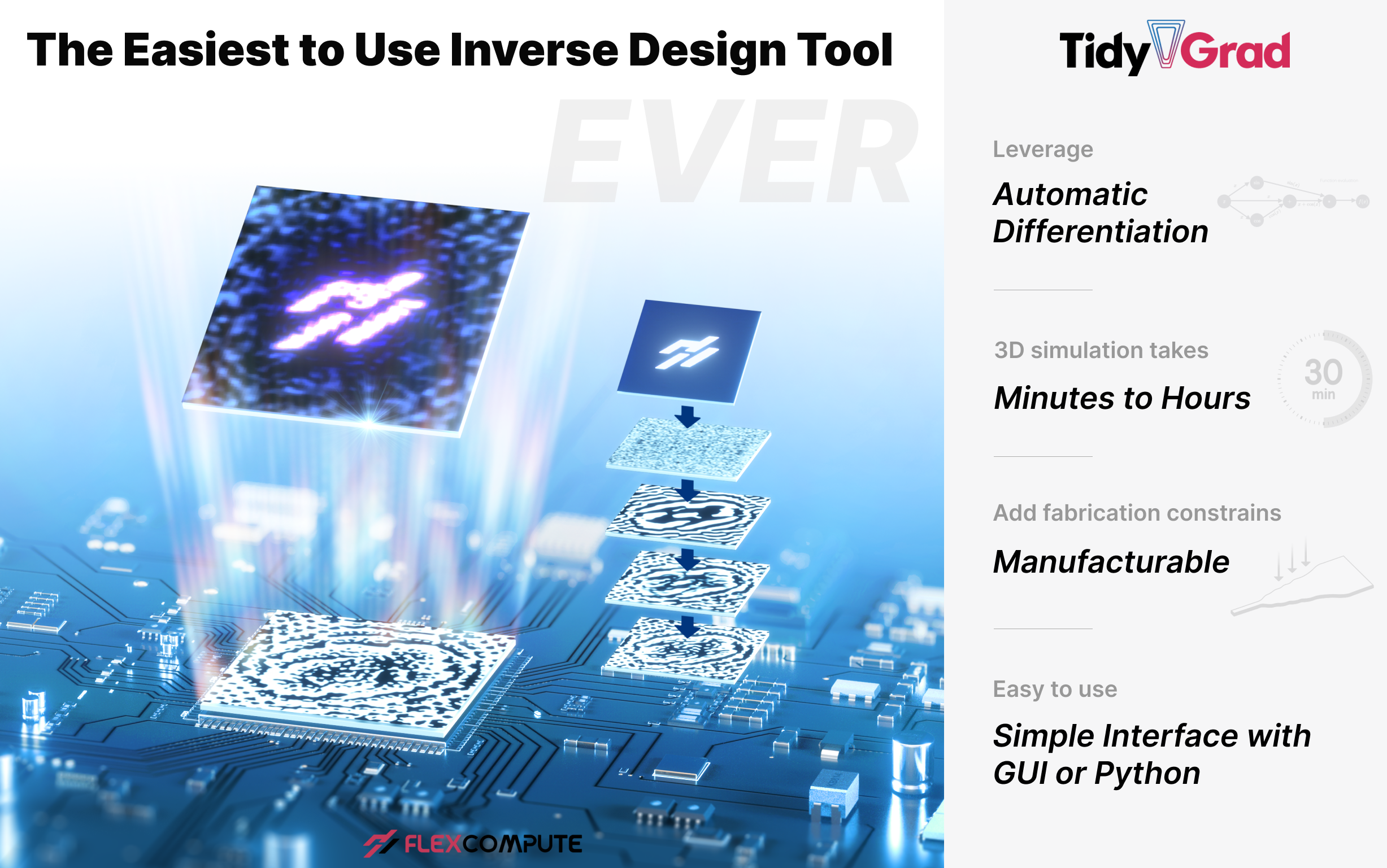

The Simplest Tool for Inverse Design

What is inverse design?

Inverse design is a method for automatically generating photonic devices that meet a custom performance metric and set of design criteria. One first defines an objective function to be maximized with respect to a set of design parameters (such as geometric or material properties), subject to constraints. The objective is then maximized with a gradient-based optimization algorithm, yielding a device that meets the performance specifications. The resulting designs are often unintuitive and can outperform conventional approaches. This technique is enabled by the “adjoint” method, which computes the full gradient using only one additional simulation, even when the gradient has thousands or millions of elements, as is common in inverse design applications.
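The “one additional simulation” claim can be made concrete with a minimal sketch of the adjoint method on a toy problem. It assumes a small linear system A(p) x = b as a stand-in for the discretized field equations; this is not TidyGrad's implementation, and `system_matrix`, `objective`, `adjoint_gradient`, and the other names are hypothetical. Differentiating A x = b gives ∂x/∂p_i = -A⁻¹ (∂A/∂p_i) x. Solving the single adjoint system Aᵀ λ = ∂f/∂x therefore yields every component df/dp_i = -λᵀ (∂A/∂p_i) x at once. The sketch checks the result against central finite differences, which need two solves per parameter.

```python
# HYPOTHETICAL toy stand-in for a simulation: a 1D tridiagonal system
# A(p) x = b with one tunable "material" parameter p[i] per grid cell.

N = 5          # number of cells, i.e. number of design parameters
SOURCE = 0     # index where the source b is injected
PROBE = N - 1  # index where the objective is measured


def system_matrix(p):
    """A(p) = T + diag(p), where T is the tridiagonal [-1, 2, -1] operator."""
    A = [[0.0] * N for _ in range(N)]
    for i in range(N):
        A[i][i] = 2.0 + p[i]
        if i > 0:
            A[i][i - 1] = -1.0
        if i < N - 1:
            A[i][i + 1] = -1.0
    return A


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def transpose(A):
    return [list(row) for row in zip(*A)]


def source():
    b = [0.0] * N
    b[SOURCE] = 1.0
    return b


def objective(p):
    """f(p) = x[PROBE]**2, an 'intensity' measured at the probe cell."""
    x = solve(system_matrix(p), source())
    return x[PROBE] ** 2


def adjoint_gradient(p):
    """Full gradient df/dp from one forward solve plus one adjoint solve."""
    A = system_matrix(p)
    x = solve(A, source())             # forward "simulation"
    dfdx = [0.0] * N
    dfdx[PROBE] = 2.0 * x[PROBE]       # adjoint source is df/dx
    lam = solve(transpose(A), dfdx)    # adjoint "simulation": A^T lam = df/dx
    # dA/dp[i] has a single 1 at (i, i), so -lam^T (dA/dp[i]) x = -lam[i] * x[i]
    return [-lam[i] * x[i] for i in range(N)]


def finite_difference_gradient(p, h=1e-6):
    """Reference gradient: two solves per parameter (2N in total)."""
    grad = []
    for i in range(N):
        up = list(p)
        up[i] += h
        down = list(p)
        down[i] -= h
        grad.append((objective(up) - objective(down)) / (2 * h))
    return grad


params = [0.1, 0.5, 0.2, 0.8, 0.3]
g_adj = adjoint_gradient(params)
g_fd = finite_difference_gradient(params)
for i, (ga, gf) in enumerate(zip(g_adj, g_fd)):
    print(f"df/dp[{i}]: adjoint={ga:.8f}  finite-diff={gf:.8f}")
```

With N parameters, the adjoint route costs two solves in total while finite differences cost 2N, which is why the adjoint approach remains practical when the gradient has millions of elements.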

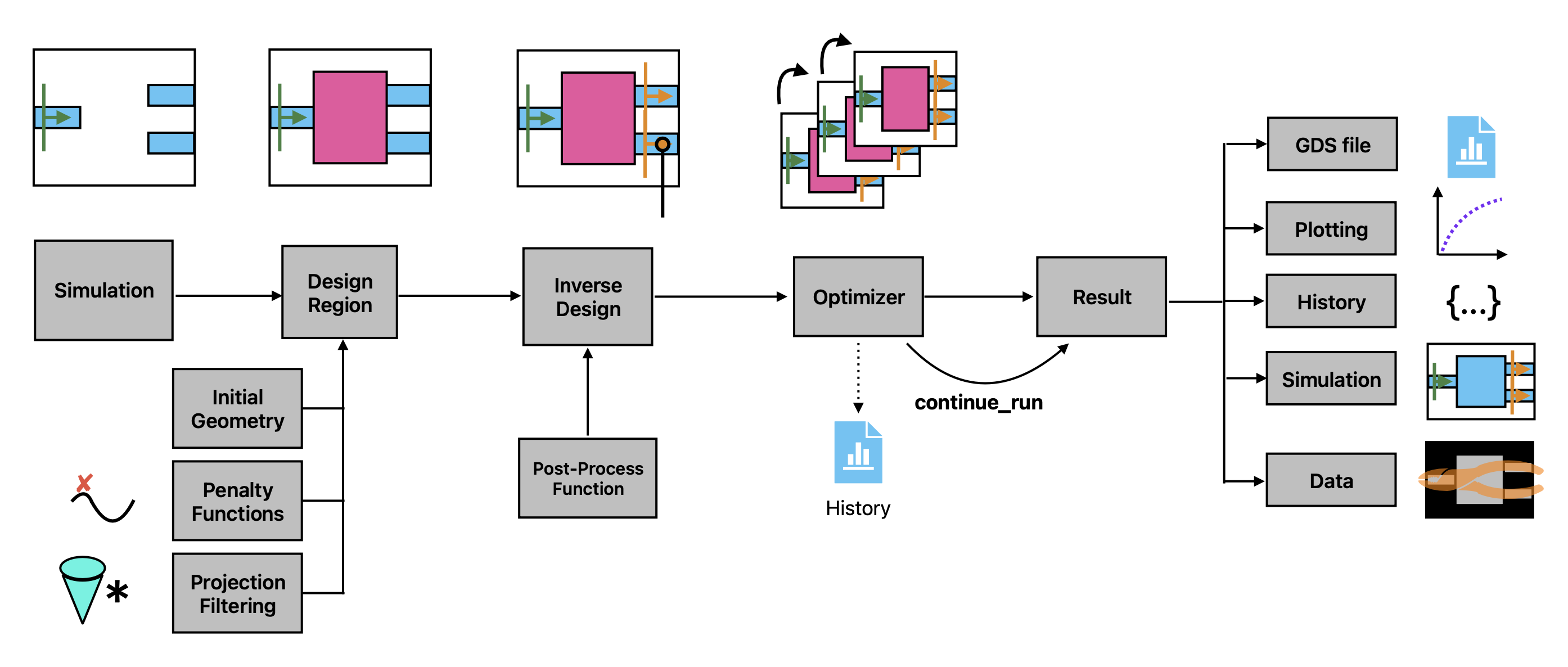

How does TidyGrad work?

TidyGrad uses automatic differentiation (AD) to make the inverse design process as simple as possible. The TidyGrad simulation code is integrated directly into common platforms for training machine learning models. TidyGrad tells these platforms how to compute derivatives of FDTD simulations using the adjoint method, and the AD tools handle the rest. As a result, one can write an objective function in regular Python code involving one or more Tidy3D simulations and arbitrary pre- and post-processing. The gradient of this function is then computed efficiently with the adjoint method, in a single line of code and without deriving any derivatives by hand.

The simulations are backed by cloud-based GPU solvers, which makes them fast and enables large-scale 3D inverse design problems.

Why is it better than similar tools?

Other products restrict their users to a small set of supported operations. This is very limiting when an objective needs anything beyond the most basic operations. Because TidyGrad relies on automatic differentiation to handle everything around the simulation, native Python and NumPy code is differentiable, which makes highly flexible, custom metrics possible. We support differentiation with respect to most of our simulation specifications and data outputs, which opens up a wide range of possibilities.

TidyGrad’s adjoint code is general, well tested, and backed by massively parallel GPU solvers, making it extremely fast. Its front-end code also interfaces seamlessly with Python packages for machine learning, scientific computing, and visualization.

How do I use TidyGrad?

All the user needs to do is write the objective as a regular Python function, `metric = f(params)`, that takes the design parameters and returns the metric as a single number. A single line of code then transforms this function into one that returns its gradient, computed with the adjoint method: `gradient = grad(f)(params)`. The resulting gradient can be plugged into an open-source or custom optimizer of your choice. See the example for inspiration.
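The workflow described above (write `f`, call `grad(f)`, feed the result to an optimizer) can be sketched end to end with only the Python standard library. In this sketch a tiny reverse-mode AD (`Var`, `sin`, and `grad`, all written just for this example) plays the role of the AD platform. `toy_simulation` is a hypothetical analytic stand-in for a Tidy3D simulation; in TidyGrad, that part's gradient would come from the adjoint method instead. The asymmetry penalty is an illustrative post-processing step that is differentiated automatically, and a plain gradient-ascent loop stands in for the optimizer.

```python
import math


class Var:
    """A scalar node in a computation graph, used for reverse-mode AD."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # pairs of (parent node, d(self)/d(parent))
        self.grad = 0.0

    @staticmethod
    def lift(other):
        return other if isinstance(other, Var) else Var(other)

    def __add__(self, other):
        other = Var.lift(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __sub__(self, other):
        other = Var.lift(other)
        return Var(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __rsub__(self, other):
        return Var.lift(other) - self

    def __mul__(self, other):
        other = Var.lift(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __rmul__ = __mul__


def sin(v):
    return Var(math.sin(v.value), ((v, math.cos(v.value)),))


def grad(f):
    """Return a function that computes the gradient of a scalar-valued f."""
    def grad_f(params):
        inputs = [Var(p) for p in params]
        out = f(inputs)
        # Topologically order the graph: each node after everything it uses.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(out)
        out.grad = 1.0
        # Sweep backwards, applying the chain rule once per graph edge.
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad
        return [v.grad for v in inputs]
    return grad_f


def toy_simulation(params):
    """HYPOTHETICAL stand-in for a simulation returning a transmitted power."""
    return sin(params[0]) * sin(params[1])


def f(params):
    power = toy_simulation(params)      # the "simulation"
    diff = params[0] - params[1]
    return power - 0.1 * diff * diff    # post-processing: asymmetry penalty


params = [0.3, 0.9]
grad_f = grad(f)                        # the single-line transformation
for step in range(100):                 # plain gradient ascent as the optimizer
    gradient = grad_f(params)
    params = [p + 0.5 * g for p, g in zip(params, gradient)]

print(params)
```

Because `grad(f)` only requires `f` to be built from differentiable operations, changing the penalty or adding further processing steps needs no new derivative code. The step size of 0.5 is tuned for this toy objective only.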

How do I get started quickly?

Whether you are a GUI user or a Python enthusiast, we recommend starting with this document and then working through a couple of examples. If you want to focus on the GUI, we have prepared an example for you.

If you want a refresher on the concepts first, the inverse design course by Tyler and Shanhui is a good tutorial. Tyler’s presentation is a useful introduction that runs from fundamental physics to practical applications.